| PolarSPARC |

Quick Primer on Llama.cpp

| Bhaskar S | *UPDATED* 12/26/2025 |

Overview

llama.cpp is an efficient open source inference platform for running Large Language Models (LLMs for short) on a local machine.

The llama.cpp platform comes with a built-in web UI for interacting with the local LLMs from a browser. In addition, the platform exposes a local API endpoint, which lets app developers build AI applications and workflows on top of the local LLMs.

Last but not least, the llama.cpp platform makes efficient use of the system resources of the local machine, such as the CPU(s) and the GPU(s), to run the LLMs with better performance.

In this primer, we will demonstrate how to set up and run the llama.cpp platform using a Docker image.

Installation and Setup

The installation and setup will be on an Ubuntu 24.04 LTS based Linux desktop. Ensure that Docker is installed and set up on the desktop (see INSTRUCTIONS). Also, ensure that the Python 3.1x programming language is installed and set up on the desktop.

We will create the required models directory by executing the following command in a terminal window:

$ mkdir -p $HOME/.llama_cpp/models

From the llama.cpp docker REPOSITORY, one can identify the current version of the docker image. At the time of this article, the tag of the latest docker image ended with the build version b7524.

We require the docker image with the tag word full. If the desktop has an Nvidia GPU, one can look for the docker image with the tag words full-cuda.

To pull and download the full docker image for llama.cpp with CUDA support, execute the following command in a terminal window:

$ docker pull ghcr.io/ggml-org/llama.cpp:full-cuda-b7524

The following should be the typical output:

full-cuda-b7524: Pulling from ggml-org/llama.cpp

bccd10f490ab: Pull complete

edd1dba56169: Pull complete

e06eb1b5c4cc: Pull complete

7f308a765276: Pull complete

3af11d09e9cd: Pull complete

42896cdfd7b6: Pull complete

600519079558: Pull complete

0ae42424cadf: Pull complete

73b7968785dc: Pull complete

4fbc369d043d: Pull complete

76db7eb25496: Pull complete

cfc283de0b19: Pull complete

4f4fb700ef54: Pull complete

8c5e4a3b9157: Pull complete

Digest: sha256:614d1b70a777b4914f5a928fdde72d6602c3b5bde6114c446dbe1c4d4e51d2d1

Status: Downloaded newer image for ghcr.io/ggml-org/llama.cpp:full-cuda-b7524

ghcr.io/ggml-org/llama.cpp:full-cuda-b7524

To install the necessary Python packages, execute the following command:

$ pip install python-dotenv langchain langchain-core langchain-openai pydantic

This completes all the system installation and setup for the llama.cpp hands-on demonstration.

Hands-on with llama.cpp

Before we get started, we need to determine all the available llama.cpp command(s). To determine the supported command(s), execute the following command:

$ docker run --rm --name llama_cpp ghcr.io/ggml-org/llama.cpp:full-cuda-b7524 --help

The following should be the typical output:

Unknown command: --help

Available commands:

--run (-r): Run a model (chat) previously converted into ggml

ex: -m /models/7B/ggml-model-q4_0.bin

--run-legacy (-l): Run a model (legacy completion) previously converted into ggml

ex: -m /models/7B/ggml-model-q4_0.bin -no-cnv -p "Building a website can be done in 10 simple steps:" -n 512

--bench (-b): Benchmark the performance of the inference for various parameters.

ex: -m model.gguf

--perplexity (-p): Measure the perplexity of a model over a given text.

ex: -m model.gguf -f file.txt

--convert (-c): Convert a llama model into ggml

ex: --outtype f16 "/models/7B/"

--quantize (-q): Optimize with quantization process ggml

ex: "/models/7B/ggml-model-f16.bin" "/models/7B/ggml-model-q4_0.bin" 2

--all-in-one (-a): Execute --convert & --quantize

ex: "/models/" 7B

--server (-s): Run a model on the server

ex: -m /models/7B/ggml-model-q4_0.bin -c 2048 -ngl 43 -mg 1 --port 8080

We want to leverage llama.cpp to serve an LLM model for inference, so we will use the --server command.

To determine all the options for the --server command, execute the following command:

$ docker run --rm --name llama_cpp ghcr.io/ggml-org/llama.cpp:full-cuda-b7524 --server --help

The output is very long, so we will NOT show it here.

The next step is to identify the Nvidia GPU device(s) on the desktop. To identify the CUDA device(s), execute the following command:

$ docker run --rm --name llama_cpp --gpus all ghcr.io/ggml-org/llama.cpp:full-cuda-b7524 --server --list-devices

The following should be the typical output:

ggml_cuda_init: GGML_CUDA_FORCE_MMQ: no

ggml_cuda_init: GGML_CUDA_FORCE_CUBLAS: no

ggml_cuda_init: found 1 CUDA devices:

Device 0: NVIDIA GeForce RTX 4060 Ti, compute capability 8.9, VMM: yes

load_backend: loaded CUDA backend from /app/libggml-cuda.so

load_backend: loaded CPU backend from /app/libggml-cpu-haswell.so

Available devices:

CUDA0: NVIDIA GeForce RTX 4060 Ti (15944 MiB, 15079 MiB free)

From the output above, the CUDA device is identified as CUDA0.
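When the launch is scripted, the device identifier can be pulled out of the `--list-devices` output instead of being read by eye. The following is a minimal Python sketch, assuming the output keeps the `ID: name (total MiB, free MiB free)` layout shown above; the `parse_devices` helper is hypothetical and not part of llama.cpp:

```python
import re

# Matches lines such as:
#   CUDA0: NVIDIA GeForce RTX 4060 Ti (15944 MiB, 15079 MiB free)
DEVICE_LINE = re.compile(
    r"^\s*(?P<id>\w+):\s+(?P<name>.+?)\s+\((?P<total>\d+) MiB, (?P<free>\d+) MiB free\)\s*$"
)

def parse_devices(output: str) -> list[dict]:
    """Return one dict per device line found in the --list-devices output."""
    devices = []
    for line in output.splitlines():
        match = DEVICE_LINE.match(line)
        if match:
            devices.append({
                "id": match["id"],
                "name": match["name"],
                "total_mib": int(match["total"]),
                "free_mib": int(match["free"]),
            })
    return devices

# Sample text copied from the output in this article
sample = """ggml_cuda_init: found 1 CUDA devices:
Device 0: NVIDIA GeForce RTX 4060 Ti, compute capability 8.9, VMM: yes
Available devices:
CUDA0: NVIDIA GeForce RTX 4060 Ti (15944 MiB, 15079 MiB free)"""

devices = parse_devices(sample)
print(devices)
```

The `id` field of the first entry is the value one would pass to the --device option.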

The following table summarizes the commonly used options with the --server command:

| Option | Description |
|---|---|
| --model | Specifies the path to the GGUF file of the LLM model being served |
| --n-gpu-layers | Sets the number of model layers to offload to the GPU device. A value of 0 keeps all the layers on the CPU |
| --device | Specifies the backend device to use (for example, CUDA0) |
| --predict | The maximum number of tokens to generate. The default is -1, which indicates infinite generation |
| --temp | Controls the creativity and randomness of the output. Lower values produce more deterministic output |
| --top-k | Restricts sampling to the k most probable tokens at each generation step |
| --ctx-size | Sets the size of the context window, that is, the number of tokens the model can keep track of at any time during text generation |
| --flash-attn | Enables flash attention for faster inference. The default value is 'auto' |
| --alias | Specifies the model name that can be used in the APIs. Default is the model name |
| --host | Indicates the host address the server listens on |
| --port | Indicates the port the server listens on |
| --threads | Indicates the number of CPU threads to use during generation. Default is all CPU threads |
| --log-timestamps | Enables timestamps in log messages |
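The options in the table map directly onto the argument list passed to the container. As a rough sketch, the Python below assembles a `docker run` command line from a dictionary of options. The `build_server_command` helper, the model file name `model.gguf`, and the context size of 4096 are hypothetical values chosen for illustration; the image tag, container name, and volume mapping are the ones used in this article:

```python
import os
import shlex

IMAGE = "ghcr.io/ggml-org/llama.cpp:full-cuda-b7524"

def build_server_command(options: dict, models_dir: str, use_gpu: bool = True) -> list[str]:
    """Assemble the docker command line for the --server mode of the image."""
    cmd = ["docker", "run", "--rm", "--name", "llama_cpp"]
    if use_gpu:
        cmd += ["--gpus", "all"]
    cmd += ["--network", "host", "-v", f"{models_dir}:/root/.cache/llama.cpp", IMAGE, "--server"]
    for flag, value in options.items():
        cmd.append(f"--{flag}")
        # Flags that take no value (such as --log-timestamps) are given as None
        if value is not None:
            cmd.append(str(value))
    return cmd

options = {
    "model": "/root/.cache/llama.cpp/model.gguf",  # hypothetical file name
    "device": "CUDA0",
    "ctx-size": 4096,                               # illustrative value
    "temp": 0.2,
    "host": "192.168.1.25",
    "port": 8000,
    "log-timestamps": None,
}
command = build_server_command(options, os.path.expanduser("~/.llama_cpp/models"))
print(shlex.join(command))
```

Keeping the options in a dictionary makes it easy to switch models or ports without retyping the full command.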

For the hands-on demonstrations, we will download and use two models from Hugging Face - the first is the Gemma 3N 4B LLM model and the other is the Gemma 3 4B LLM model.

We will start with the Gemma 3N 4B LLM model. To download and serve the desired LLM model, execute the following command in the terminal window:

$ docker run --rm --name llama_cpp --gpus all --network host -v $HOME/.llama_cpp/models:/root/.cache/llama.cpp ghcr.io/ggml-org/llama.cpp:full-cuda-b7524 --server --hf-repo unsloth/gemma-3n-E4B-it-GGUF --host 192.168.1.25 --port 8000 --device CUDA0 --temp 0.2 --log-timestamps

The following should be the typical trimmed output:

ggml_cuda_init: GGML_CUDA_FORCE_MMQ: no

ggml_cuda_init: GGML_CUDA_FORCE_CUBLAS: no

ggml_cuda_init: found 1 CUDA devices:

Device 0: NVIDIA GeForce RTX 4060 Ti, compute capability 8.9, VMM: yes

load_backend: loaded CUDA backend from /app/libggml-cuda.so

load_backend: loaded CPU backend from /app/libggml-cpu-haswell.so

common_download_file_single_online: no previous model file found /root/.cache/llama.cpp/unsloth_gemma-3n-E4B-it-GGUF_gemma-3n-E4B-it-Q4_K_M.gguf

common_download_file_single_online: trying to download model from https://huggingface.co/unsloth/gemma-3n-E4B-it-GGUF/resolve/main/gemma-3n-E4B-it-Q4_K_M.gguf to /root/.cache/llama.cpp/unsloth_gemma-3n-E4B-it-GGUF_gemma-3n-E4B-it-Q4_K_M.gguf.downloadInProgress (server_etag:"362b2c2e867b05e30a9315c640fd66ae953005ea1d2c40b8ce37b0066603a8f2", server_last_modified:)...

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 1345 100 1345 0 0 33315 0 --:--:-- --:--:-- --:--:-- 33315

100 4328M 100 4328M 0 0 98.4M 0 0:00:43 0:00:43 --:--:-- 98.8M

main: n_parallel is set to auto, using n_parallel = 4 and kv_unified = true

build: 7524 (5ee4e43f2) with GNU 11.4.0 for Linux x86_64

system info: n_threads = 8, n_threads_batch = 8, total_threads = 16

system_info: n_threads = 8 (n_threads_batch = 8) / 16 | CUDA : ARCHS = 500,610,700,750,800,860,890 | USE_GRAPHS = 1 | PEER_MAX_BATCH_SIZE = 128 | CPU : SSE3 = 1 | SSSE3 = 1 | AVX = 1 | AVX2 = 1 | F16C = 1 | FMA = 1 | BMI2 = 1 | LLAMAFILE = 1 | OPENMP = 1 | REPACK = 1 |

init: using 15 threads for HTTP server

start: binding port with default address family

main: loading model

srv load_model: loading model '/root/.cache/llama.cpp/unsloth_gemma-3n-E4B-it-GGUF_gemma-3n-E4B-it-Q4_K_M.gguf'

common_init_result: fitting params to device memory, for bugs during this step try to reproduce them with -fit off, or provide --verbose logs if the bug only occurs with -fit on

llama_params_fit_impl: projected to use 5174 MiB of device memory vs. 15944 MiB of free device memory

llama_params_fit_impl: will leave 9910 >= 1024 MiB of free device memory, no changes needed

llama_params_fit: successfully fit params to free device memory

llama_params_fit: fitting params to free memory took 0.41 seconds

llama_model_load_from_file_impl: using device CUDA0 (NVIDIA GeForce RTX 4060 Ti) (0000:04:00.0) - 15084 MiB free

llama_model_loader: loaded meta data with 51 key-value pairs and 847 tensors from /root/.cache/llama.cpp/unsloth_gemma-3n-E4B-it-GGUF_gemma-3n-E4B-it-Q4_K_M.gguf (version GGUF V3 (latest))

.....[ TRIM ].....

main: model loaded

main: server is listening on http://192.168.1.25:8000

main: starting the main loop...

srv update_slots: all slots are idle
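Instead of watching the log for the "server is listening" line, a script can poll the server until the model has finished loading. The following is a minimal sketch, assuming the server exposes a `/health` endpoint that returns `{"status": "ok"}` once it is ready (as recent llama.cpp server builds do); the `fetch_health` and `wait_until_ready` helpers are hypothetical names:

```python
import json
import time
import urllib.error
import urllib.request

def fetch_health(base_url: str, timeout: float = 2.0) -> dict:
    """GET <base_url>/health and return the decoded JSON body."""
    with urllib.request.urlopen(f"{base_url}/health", timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))

def wait_until_ready(base_url: str, attempts: int = 30, delay: float = 1.0,
                     fetch=fetch_health) -> bool:
    """Poll the health endpoint until it reports ok or the attempts run out."""
    for _ in range(attempts):
        try:
            if fetch(base_url).get("status") == "ok":
                return True
        except (urllib.error.URLError, OSError, ValueError):
            # Server not reachable yet, still loading the model, or a non-JSON reply
            pass
        time.sleep(delay)
    return False
```

With the server from this article running, `wait_until_ready("http://192.168.1.25:8000")` would be expected to return `True` once the model is loaded. The `fetch` parameter exists only so the polling logic can be exercised without a live server.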

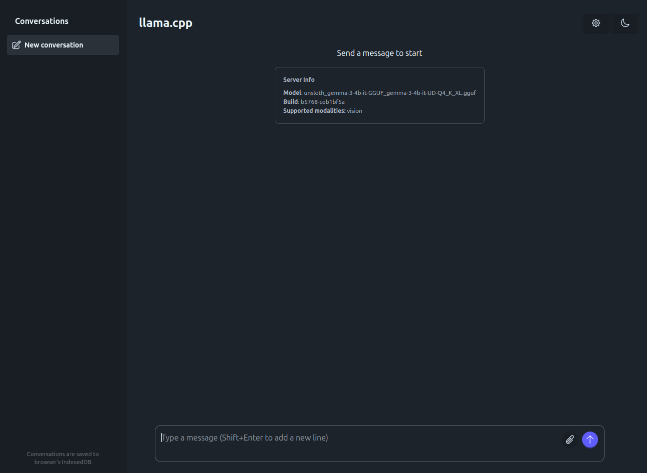

Now, launch the Web Browser and open the URL http://192.168.1.25:8000. The following illustration depicts the llama.cpp user interface:

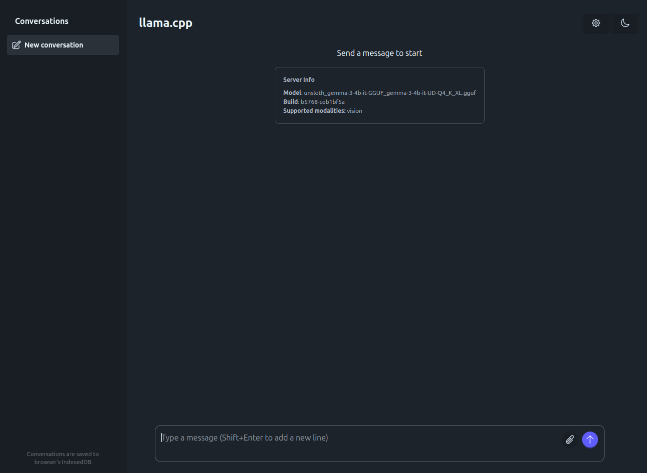

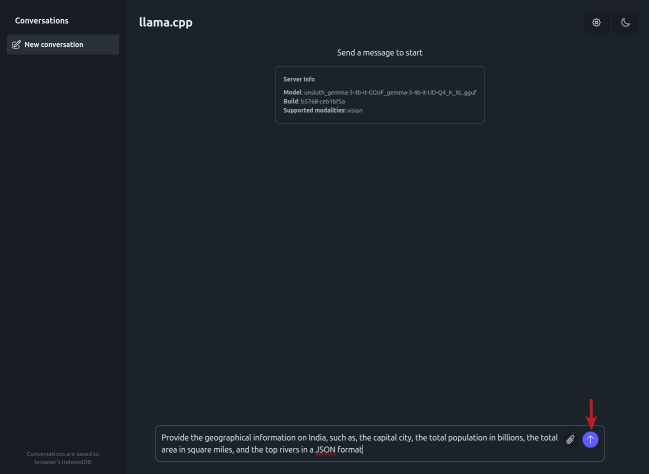

Enter the user prompt in the text box at the bottom and click on the circled UP arrow (indicated by the red arrow) as shown in the illustration below:

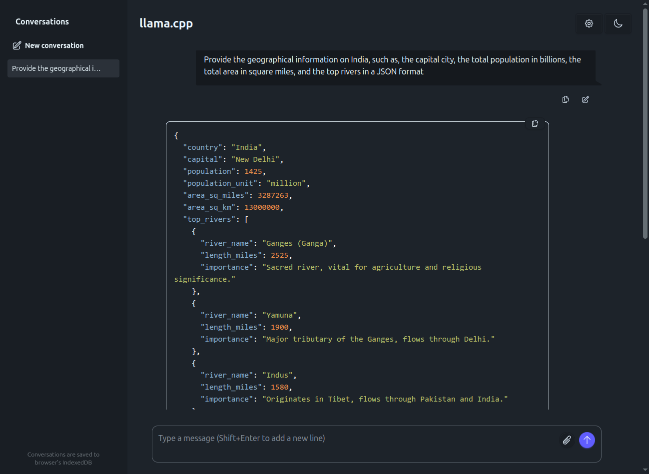

The response from the LLM is as shown in the illustration below:

Next, enter the user prompt (for solving a logical puzzle) in the text box at the bottom and click on the circled UP arrow (indicated by the red arrow) as shown in the illustration below:

The response from the LLM is as shown in the illustration below:

Exit the browser and stop the llama.cpp server running in the terminal window.

Shifting gears, we will now move to the Gemma 3 4B LLM model. To download and serve the desired LLM model, execute the following command in the terminal window:

$ docker run --rm --name llama_cpp --gpus all --network host -v $HOME/.llama_cpp/models:/root/.cache/llama.cpp ghcr.io/ggml-org/llama.cpp:full-cuda-b7524 --server --hf-repo unsloth/gemma-3-4b-it-GGUF:Q4_K_XL --host 192.168.1.25 --port 8000 --device CUDA0 --temp 0.2 --log-timestamps

The following should be the typical trimmed output:

ggml_cuda_init: GGML_CUDA_FORCE_CUBLAS: no

ggml_cuda_init: found 1 CUDA devices:

Device 0: NVIDIA GeForce RTX 4060 Ti, compute capability 8.9, VMM: yes

load_backend: loaded CUDA backend from /app/libggml-cuda.so

load_backend: loaded CPU backend from /app/libggml-cpu-haswell.so

common_download_file_single_online: no previous model file found /root/.cache/llama.cpp/unsloth_gemma-3-4b-it-GGUF_gemma-3-4b-it-UD-Q4_K_XL.gguf

common_download_file_single_online: trying to download model from https://huggingface.co/unsloth/gemma-3-4b-it-GGUF/resolve/main/gemma-3-4b-it-UD-Q4_K_XL.gguf to /root/.cache/llama.cpp/unsloth_gemma-3-4b-it-GGUF_gemma-3-4b-it-UD-Q4_K_XL.gguf.downloadInProgress (server_etag:"a855cedae6f99feeb0ff5f7e46eb02d03a3db3a206034065509e809f7e89ea45", server_last_modified:)...

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 1343 100 1343 0 0 50992 0 --:--:-- --:--:-- --:--:-- 50992

100 2426M 100 2426M 0 0 100M 0 0:00:24 0:00:24 --:--:-- 100M

common_download_file_single_online: no previous model file found /root/.cache/llama.cpp/unsloth_gemma-3-4b-it-GGUF_mmproj-F16.gguf

common_download_file_single_online: trying to download model from https://huggingface.co/unsloth/gemma-3-4b-it-GGUF/resolve/main/mmproj-F16.gguf to /root/.cache/llama.cpp/unsloth_gemma-3-4b-it-GGUF_mmproj-F16.gguf.downloadInProgress (server_etag:"2182a97cbae2f01c910d358024e46f26303ace2481dea400605a688fbebaaee2", server_last_modified:)...

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 1315 100 1315 0 0 22712 0 --:--:-- --:--:-- --:--:-- 22712

100 811M 100 811M 0 0 104M 0 0:00:07 0:00:07 --:--:-- 106M

main: n_parallel is set to auto, using n_parallel = 4 and kv_unified = true

build: 7524 (5ee4e43f2) with GNU 11.4.0 for Linux x86_64

system info: n_threads = 8, n_threads_batch = 8, total_threads = 16

system_info: n_threads = 8 (n_threads_batch = 8) / 16 | CUDA : ARCHS = 500,610,700,750,800,860,890 | USE_GRAPHS = 1 | PEER_MAX_BATCH_SIZE = 128 | CPU : SSE3 = 1 | SSSE3 = 1 | AVX = 1 | AVX2 = 1 | F16C = 1 | FMA = 1 | BMI2 = 1 | LLAMAFILE = 1 | OPENMP = 1 | REPACK = 1 |

init: using 15 threads for HTTP server

start: binding port with default address family

main: loading model

srv load_model: loading model '/root/.cache/llama.cpp/unsloth_gemma-3-4b-it-GGUF_gemma-3-4b-it-UD-Q4_K_XL.gguf'

common_init_result: fitting params to device memory, for bugs during this step try to reproduce them with -fit off, or provide --verbose logs if the bug only occurs with -fit on

llama_params_fit_impl: projected to use 6019 MiB of device memory vs. 15944 MiB of free device memory

llama_params_fit_impl: will leave 8889 >= 1024 MiB of free device memory, no changes needed

llama_params_fit: successfully fit params to free device memory

llama_params_fit: fitting params to free memory took 0.37 seconds

llama_model_load_from_file_impl: using device CUDA0 (NVIDIA GeForce RTX 4060 Ti) (0000:04:00.0) - 14908 MiB free

llama_model_loader: loaded meta data with 40 key-value pairs and 444 tensors from /root/.cache/llama.cpp/unsloth_gemma-3-4b-it-GGUF_gemma-3-4b-it-UD-Q4_K_XL.gguf (version GGUF V3 (latest))

.....[ TRIM ].....

main: model loaded

main: server is listening on http://192.168.1.25:8000

main: starting the main loop...

srv update_slots: all slots are idle
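The memory fit messages in the two server logs can be checked with simple arithmetic. Judging only from the numbers in the logs (this is an inference, not something taken from the llama.cpp source), the "will leave" figure is the free device memory reported on the "using device" line minus the projected usage, and it is compared against a 1024 MiB margin. A small sketch with a hypothetical `remaining_after_load` helper:

```python
def remaining_after_load(free_mib: int, projected_mib: int, margin_mib: int = 1024) -> tuple[int, bool]:
    """Return the memory left after loading and whether it clears the margin."""
    remaining = free_mib - projected_mib
    return remaining, remaining >= margin_mib

# Values copied from the two server logs in this article
gemma_3n = remaining_after_load(15084, 5174)   # log reports: will leave 9910 >= 1024
gemma_3 = remaining_after_load(14908, 6019)    # log reports: will leave 8889 >= 1024
print(gemma_3n, gemma_3)
```

Both results agree with the logged values, which suggests that either model leaves ample headroom on the 16 GB GPU used here.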

Once again, launch the Web Browser and open the URL http://192.168.1.25:8000.

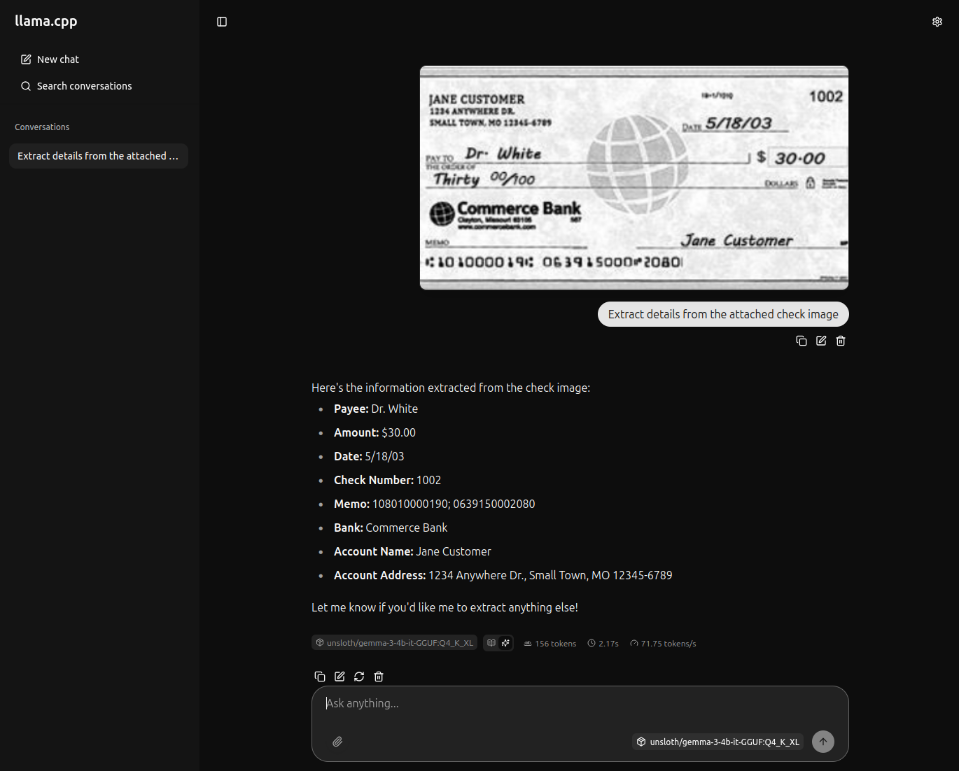

For the next task, we will use the bank check as shown in the illustration below:

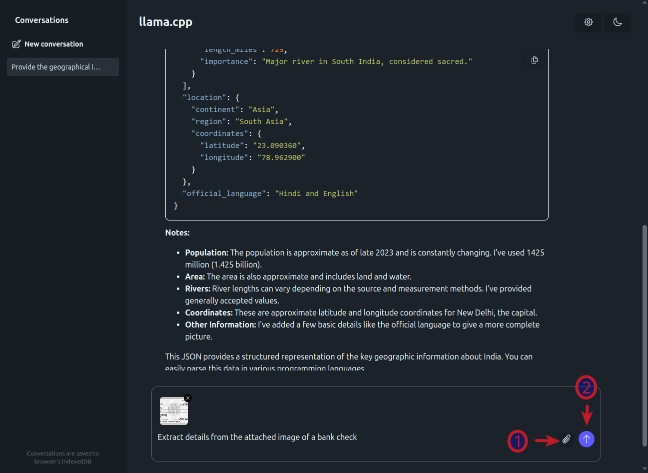

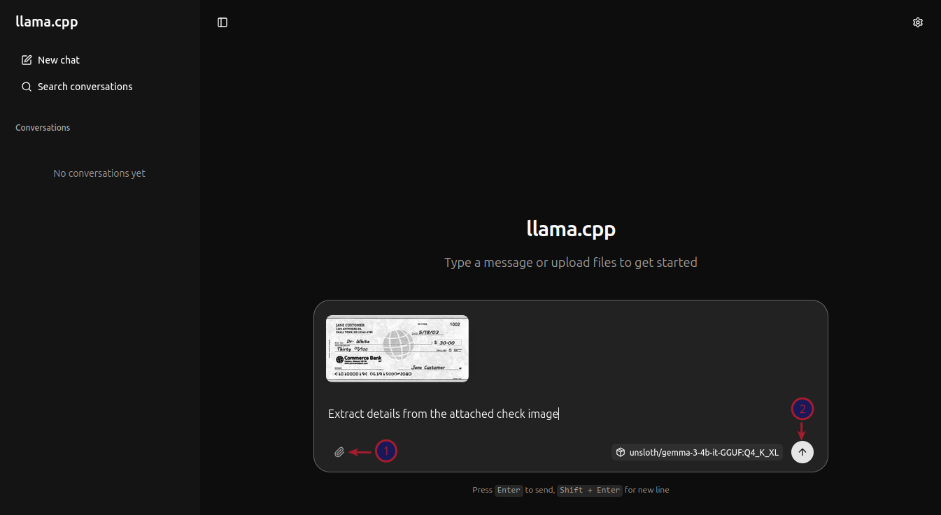

Click on the attachment icon (red number 1 arrow) to attach the above bank check image, then enter the user prompt in the text box at the bottom and click on the circled UP arrow (red number 2 arrow) as shown in the illustration below:

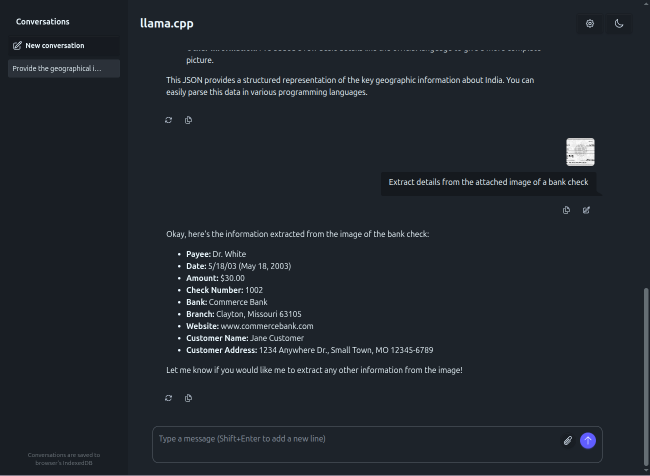

The response from the LLM after processing the bank check image is as shown in the illustration below:

Next, to test the llama.cpp inference platform via the API endpoint, execute the following command in the terminal window:

$ curl -s -X POST "http://192.168.1.25:8000/completion" -H "Content-Type: application/json" -d '{"prompt": "Describe an ESP32 microcontroller in less than 100 words", "max_tokens": 150}' | jq

The following should be the typical output:

{

"index": 0,

"content": ".\n\nThe ESP32 is a powerful, low-cost microcontroller packed with Wi-Fi and Bluetooth capabilities. It's ideal for IoT projects, connecting devices to the internet, and creating smart home applications. Featuring a dual-core processor, it can handle complex tasks and supports various communication protocols. It's popular among hobbyists and professionals alike due to its versatility, ease of use, and extensive community support.\n",

"tokens": [],

"id_slot": 3,

"stop": true,

"model": "unsloth/gemma-3-4b-it-GGUF:Q4_K_XL",

"tokens_predicted": 88,

"tokens_evaluated": 15,

"generation_settings": {

"seed": 4294967295,

"temperature": 0.20000000298023224,

"dynatemp_range": 0.0,

"dynatemp_exponent": 1.0,

"top_k": 40,

"top_p": 0.949999988079071,

"min_p": 0.05000000074505806,

"top_n_sigma": -1.0,

"xtc_probability": 0.0,

"xtc_threshold": 0.10000000149011612,

"typical_p": 1.0,

"repeat_last_n": 64,

"repeat_penalty": 1.0,

"presence_penalty": 0.0,

"frequency_penalty": 0.0,

"dry_multiplier": 0.0,

"dry_base": 1.75,

"dry_allowed_length": 2,

"dry_penalty_last_n": 131072,

"dry_sequence_breakers": [

"\n",

":",

"\"",

"*"

],

"mirostat": 0,

"mirostat_tau": 5.0,

"mirostat_eta": 0.10000000149011612,

"stop": [],

"max_tokens": 150,

"n_predict": 150,

"n_keep": 0,

"n_discard": 0,

"ignore_eos": false,

"stream": false,

"logit_bias": [],

"n_probs": 0,

"min_keep": 0,

"grammar": "",

"grammar_lazy": false,

"grammar_triggers": [],

"preserved_tokens": [],

"chat_format": "Content-only",

"reasoning_format": "deepseek",

"reasoning_in_content": false,

"thinking_forced_open": false,

"samplers": [

"penalties",

"dry",

"top_n_sigma",

"top_k",

"typ_p",

"top_p",

"min_p",

"xtc",

"temperature"

],

"speculative.n_max": 16,

"speculative.n_min": 0,

"speculative.p_min": 0.75,

"timings_per_token": false,

"post_sampling_probs": false,

"lora": []

},

"prompt": "<bos>Describe an ESP32 microcontroller in less than 100 words",

"has_new_line": true,

"truncated": false,

"stop_type": "eos",

"stopping_word": "",

"tokens_cached": 102,

"timings": {

"cache_n": 0,

"prompt_n": 15,

"prompt_ms": 43.712,

"prompt_per_token_ms": 2.9141333333333335,

"prompt_per_second": 343.15519765739384,

"predicted_n": 88,

"predicted_ms": 1046.312,

"predicted_per_token_ms": 11.88990909090909,

"predicted_per_second": 84.10493237198848

}

}
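The `timings` block in the response can be sanity-checked with simple arithmetic: each per-second rate is the token count divided by the elapsed time in seconds. The sketch below recomputes both rates from the values in the output above; the `tokens_per_second` helper is a hypothetical name:

```python
def tokens_per_second(token_count: int, elapsed_ms: float) -> float:
    """Convert a token count and an elapsed time in milliseconds to tokens/second."""
    return token_count / (elapsed_ms / 1000.0)

# Values copied from the "timings" section of the response above
timings = {"prompt_n": 15, "prompt_ms": 43.712, "predicted_n": 88, "predicted_ms": 1046.312}

prompt_rate = tokens_per_second(timings["prompt_n"], timings["prompt_ms"])
generation_rate = tokens_per_second(timings["predicted_n"], timings["predicted_ms"])
print(f"prompt processing: {prompt_rate:.2f} tokens/s")
print(f"text generation:   {generation_rate:.2f} tokens/s")
```

The generation rate (about 84 tokens per second in this run) is usually the more useful number when comparing models or quantization levels on the same hardware.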

We have successfully tested the exposed local API endpoint from the command line!

Now, we will test llama.cpp using Python LangChain API code snippets.

Create a file called .env with the following environment variables defined:

LLM_TEMPERATURE=0.2

LLAMA_CPP_BASE_URL='http://192.168.1.25:8000/v1'

LLAMA_CPP_MODEL='unsloth/gemma-3-4b-it-GGUF:Q4_K_XL'

LLAMA_CPP_API_KEY='llama_cpp'

To load the environment variables and assign them to corresponding Python variables, execute the following code snippet:

from dotenv import load_dotenv, find_dotenv

import os

load_dotenv(find_dotenv())

llm_temperature = os.getenv('LLM_TEMPERATURE')

llama_cpp_base_url = os.getenv('LLAMA_CPP_BASE_URL')

llama_cpp_model = os.getenv('LLAMA_CPP_MODEL')

llama_cpp_api_key = os.getenv('LLAMA_CPP_API_KEY')
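Note that `os.getenv` returns strings, and returns `None` when a variable is missing, so a typo in the .env file only surfaces later as a confusing client error. As an optional safeguard, the following sketch validates the variables up front; the `read_settings` helper is a hypothetical name and the fallback temperature of 0.2 is simply the value used in this article:

```python
import os

REQUIRED = ("LLAMA_CPP_BASE_URL", "LLAMA_CPP_MODEL", "LLAMA_CPP_API_KEY")

def read_settings(env=os.environ) -> dict:
    """Collect the llama.cpp settings from a mapping, failing early if any is missing."""
    missing = [name for name in REQUIRED if not env.get(name)]
    if missing:
        raise KeyError(f"Missing environment variables: {', '.join(missing)}")
    return {
        "base_url": env["LLAMA_CPP_BASE_URL"],
        "model": env["LLAMA_CPP_MODEL"],
        "api_key": env["LLAMA_CPP_API_KEY"],
        # Environment values are strings, so convert the temperature explicitly
        "temperature": float(env.get("LLM_TEMPERATURE", "0.2")),
    }

# Example with the values from the .env file in this article
settings = read_settings({
    "LLM_TEMPERATURE": "0.2",
    "LLAMA_CPP_BASE_URL": "http://192.168.1.25:8000/v1",
    "LLAMA_CPP_MODEL": "unsloth/gemma-3-4b-it-GGUF:Q4_K_XL",
    "LLAMA_CPP_API_KEY": "llama_cpp",
})
print(settings)
```

Calling `read_settings()` with no argument reads from the process environment after `load_dotenv` has run.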

To initialize an instance of the OpenAI-compatible LLM client class that points at the llama.cpp server URL, execute the following code snippet:

from langchain_openai import ChatOpenAI

llm_openai = ChatOpenAI(
    model=llama_cpp_model,
    base_url=llama_cpp_base_url,
    api_key=llama_cpp_api_key,
    temperature=float(llm_temperature)
)
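A client configured this way talks to the OpenAI-compatible chat completions route of the server. To make that concrete, the sketch below builds a roughly equivalent request with only the Python standard library, assuming the server exposes `/chat/completions` under the `/v1` base URL from the .env file. The `build_chat_request` helper is a hypothetical name, and the exact payload LangChain sends may contain additional fields:

```python
import json
import urllib.request

def build_chat_request(base_url: str, model: str, api_key: str,
                       user_prompt: str, temperature: float = 0.2) -> urllib.request.Request:
    """Build a POST request for an OpenAI-style chat completion."""
    payload = {
        "model": model,
        "temperature": temperature,
        "messages": [
            {"role": "system", "content": "You are a helpful assistant"},
            {"role": "user", "content": user_prompt},
        ],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {api_key}"},
        method="POST",
    )

request = build_chat_request(
    "http://192.168.1.25:8000/v1",
    "unsloth/gemma-3-4b-it-GGUF:Q4_K_XL",
    "llama_cpp",
    "Describe an ESP32 microcontroller in less than 100 words",
)
print(request.full_url)

# Sending the request needs the llama.cpp server from this article to be running:
# with urllib.request.urlopen(request) as resp:
#     reply = json.loads(resp.read())
#     print(reply["choices"][0]["message"]["content"])
```

Because the route follows the OpenAI wire format, any OpenAI-compatible client library should be able to use the same base URL.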

To get a text response for a user prompt from the Gemma 3 4B LLM model running on the llama.cpp inference platform, execute the following code snippet:

from langchain_core.prompts import ChatPromptTemplate

prompt = ChatPromptTemplate.from_messages([

('system', 'You are a helpful assistant'),

('human', '{input}'),

])

chain = prompt | llm_openai

response = chain.invoke({'input': 'Compare the GDP of India vs USA in 2024 and provide the response in JSON format'})

response.content

The following should be the typical output:

'```json\n{\n "comparison": "GDP Comparison - India vs. USA (2024 - Estimates)",\n "date": "October 26, 2023 (Based on latest available estimates and projections)",\n "disclaimer": "Figures are estimates and projections, subject to change based on economic conditions and revisions by international organizations. Data is primarily sourced from the World Bank, IMF, and Trading Economics.",\n "data": {\n "country": "United States",\n "gdp_2024_estimate": {\n "value": 27.64 trillion,\n "unit": "USD (United States Dollars)",\n "source": "IMF (October 2023)",\n "currency_type": "USD"\n },\n "country": "India",\n "gdp_2024_estimate": {\n "value": 13.96 trillion,\n "unit": "USD (United States Dollars)",\n "source": "IMF (October 2023)",\n "currency_type": "USD"\n },\n "comparison_summary": {\n "percentage_difference": "The US GDP is approximately 2.08 times larger than India\'s GDP in 2024.",\n "percentage_change_india": "India\'s GDP is projected to grow by approximately 6.3% in 2024.",\n "percentage_change_usa": "The US GDP is projected to grow by approximately 2.1% in 2024."\n },\n "notes": [\n "These are nominal GDP figures (current prices).",\n "GDP per capita (average economic output per person) is significantly higher in the United States.",\n "Economic forecasts are inherently uncertain and subject to revision."\n ]\n }\n}\n```\n\n**Explanation of the JSON:**\n\n* **`comparison`**: A brief title for the comparison.\n* **`date`**: The date the information was compiled (based on available estimates).\n* **`disclaimer`**: Important note about the estimates and their potential for change.\n* **`data`**: Contains the GDP figures and sources.\n * **`country`**: The name of the country.\n * **`gdp_2024_estimate`**: Details about the GDP estimate for that year.\n * **`value`**: The GDP value in USD.\n * **`unit`**: The unit of measurement (USD).\n * **`source`**: The organization that provided the estimate (IMF in this case).\n * **`currency_type`**: The currency type.\n * 
**`comparison_summary`**: Provides a concise summary of the comparison.\n * **`percentage_difference`**: Calculates the percentage difference between the two countries\' GDPs.\n * **`percentage_change_india`**: The projected percentage change in India\'s GDP.\n * **`percentage_change_usa`**: The projected percentage change in the USA\'s GDP.\n* **`notes`**: Additional context and caveats.\n\n**Important Considerations:**\n\n* **Estimates:** As noted in the disclaimer, these are *estimates* for 2024. Final figures will be released later in the year and may vary.\n* **Sources:** I\'ve primarily used the IMF\'s World Economic Outlook (October 2023) as my source. You can find the full report on the IMF website. Other sources like the World Bank and Trading Economics were consulted for corroboration.\n* **Nominal vs. Real GDP:** This response uses *nominal* GDP (current prices). *Real* GDP (adjusted for inflation) would provide a more accurate comparison of economic growth over time.\n\nTo get the most up-to-date information, I recommend checking the following resources:\n\n* **International Monetary Fund (IMF):** [https://www.imf.org/](https://www.imf.org/)\n* **World Bank:** [https://www.worldbank.org/](https://www.worldbank.org/)\n* **Trading Economics:** [https://tradingeconomics.com/](https://tradingeconomics.com/)'
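Notice that the response wraps the JSON in a fenced block followed by free-form commentary, and that the JSON itself is not valid (for example, `"value": 27.64 trillion` is an unquoted value and the `country` key is repeated). Any code that consumes such a response should therefore extract the block and be prepared for parsing to fail. A minimal sketch, with a hypothetical `extract_json_block` helper:

```python
import json
import re

TICKS = "`" * 3  # the three-backtick fence marker, built here to keep this example readable
FENCE = re.compile(TICKS + r"json\s*(.*?)" + TICKS, re.DOTALL)

def extract_json_block(text: str):
    """Return (raw_block, parsed); parsed is None when the block is not valid JSON."""
    match = FENCE.search(text)
    if match is None:
        return None, None
    raw = match.group(1).strip()
    try:
        return raw, json.loads(raw)
    except json.JSONDecodeError:
        return raw, None

# A well-formed reply and a reply with the same kind of defect seen in the output above
good = "Here you go:\n" + TICKS + 'json\n{"country": "India"}\n' + TICKS + "\nDone."
bad = TICKS + 'json\n{"value": 27.64 trillion}\n' + TICKS

good_raw, good_parsed = extract_json_block(good)
bad_raw, bad_parsed = extract_json_block(bad)
print(good_parsed)
print(bad_parsed)
```

In practice one would call `extract_json_block(response.content)` and fall back to a retry or to structured output when the parsed value is `None`.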

For the next task, we will attempt to present the LLM model response in a structured form using a Pydantic data class. For that, we will first define the class by executing the following code snippet:

from pydantic import BaseModel, Field

class GeographicInfo(BaseModel):
    country: str = Field(description="Name of the Country")
    capital: str = Field(description="Name of the Capital City")
    population: int = Field(description="Population of the country in billions")
    land_area: int = Field(description="Land Area of the country in square miles")
    list_of_rivers: list = Field(description="List of top 5 rivers in the country")

To receive an LLM model response in the desired format for the specific user prompt from the llama.cpp platform, execute the following code snippet:

struct_llm_openai = llm_openai.with_structured_output(GeographicInfo)

chain = prompt | struct_llm_openai

response = chain.invoke({'input': 'Provide the geographic information of India and include the capital city, population in billions, land area in square miles, and list of rivers'})

response

The following should be the typical output:

GeographicInfo(country='India', capital='New Delhi', population=1428000000, land_area=3287263, list_of_rivers=[{'river_name': 'Ganges (Ganga)', 'significance': 'Sacred river, central to Hindu religion and culture; major waterway for transportation and irrigation.'}, {'river_name': 'Yamuna', 'significance': 'Major tributary of the Ganges, flows through Delhi and Agra.'}, {'river_name': 'Indus', 'significance': 'Historically important river, now largely in Pakistan; significant for irrigation and water resources.'}, {'river_name': 'Brahmaputra', 'significance': 'Originates in Tibet, flows through India and Bangladesh; vital for agriculture and flood control.'}, {'river_name': 'Narmada', 'significance': 'Considered sacred, flows through central India; important for irrigation and pilgrimage.'}, {'river_name': 'Godavari', 'significance': 'Major river in South India, considered sacred; important for agriculture and religious festivals.'}, {'river_name': 'Krishna', 'significance': 'Major river in South India, crucial for irrigation and water supply.'}, {'river_name': 'Mahanadi', 'significance': 'Important river in eastern India, flows through Odisha; vital for irrigation and fisheries.'}, {'river_name': 'Kaveri (Cauvery)', 'significance': 'Major river in southern India, important for agriculture and water resources.'}, {'river_name': 'Tapi', 'significance': 'Flows through western India, used for irrigation and hydroelectric power.'}])
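A few things stand out in the output above: `list_of_rivers` holds dictionaries rather than plain names, it has ten entries instead of five, and `population` is an absolute count rather than a value in billions. Since the `list` field is untyped, the model is free to choose the shape of its items. One option is to normalize the result after the fact, as in the following sketch; the `river_names` and `to_billions` helpers are hypothetical, and the `river_name` key is simply what the model happened to return in this run:

```python
def river_names(rivers: list, limit: int = 5) -> list[str]:
    """Reduce a list of strings or {'river_name': ...} dicts to at most `limit` names."""
    names = []
    for item in rivers:
        # Accept both plain strings and the dict shape seen in the output above
        name = item.get("river_name") if isinstance(item, dict) else item
        if name:
            names.append(str(name))
    return names[:limit]

def to_billions(population: int) -> float:
    """Convert an absolute population count to billions."""
    return population / 1_000_000_000

# A shortened copy of the values from the output above
rivers = [
    {"river_name": "Ganges (Ganga)"},
    {"river_name": "Yamuna"},
    {"river_name": "Indus"},
    {"river_name": "Brahmaputra"},
    {"river_name": "Narmada"},
    {"river_name": "Godavari"},
]
top_rivers = river_names(rivers)
population_billions = to_billions(1428000000)
print(top_rivers, population_billions)
```

Declaring stricter field types in the Pydantic class is another way to steer the output, though how closely a small local model follows the schema can vary from run to run.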

With this, we conclude the various demonstrations on using the llama.cpp platform for running and working with pre-trained LLM models locally!
