| PolarSPARC |

Quick Primer on Ollama

| Bhaskar S | *UPDATED* 01/31/2026 |

Overview

Ollama is a powerful open source platform that simplifies the process of running various Large Language Models (or LLMs for short) in the cloud (via Ollama's cloud service) as well as on a local machine. It enables one to download various pre-trained LLM models, such as Alibaba Qwen 3, DeepSeek-R1, Google Gemma-3, IBM Granite 4, Microsoft Phi-4, OpenAI GPT-OSS, etc., and use them for building AI applications.

In this article, we will *ONLY* demonstrate the setup and usage on a local machine.

The Ollama platform also exposes an API endpoint, which enables developers to build agentic AI applications that interact with the LLM(s) programmatically.

Last but not least, the Ollama platform effectively leverages the underlying hardware resources of the machine, such as CPU(s) and GPU(s), to run the LLMs efficiently.

In this primer, we will demonstrate how one can set up and run the Ollama platform using the Docker image on a local machine.

Installation and Setup

The installation and setup will be on an Ubuntu 24.04 LTS based Linux desktop OR an Apple Silicon based MacBook Pro. Ensure that Docker is installed and set up on the desktop (see instructions).

For Linux and MacOS, ensure that the Python 3.1x programming language as well as the Jupyter Notebook packages are installed. In addition, ensure the command-line utilities curl and jq are installed.

For Linux and MacOS, we will set up the required directory by executing the following command in a terminal window:

$ mkdir -p $HOME/.ollama

For Linux and MacOS, to pull and download the required docker image for Ollama, execute the following command in a terminal window:

$ docker pull ollama/ollama:0.15.2

The following should be the typical output:

0.15.2: Pulling from ollama/ollama
a3629ac5b9f4: Pull complete
26f128d62ae6: Pull complete
5201cf8bac07: Pull complete
9c4ac0f18dea: Pull complete
Digest: sha256:76d0c003b27db65170e88ab319ff19c9bcee41ecb8177c52ab06921dfa13abdf
Status: Downloaded newer image for ollama/ollama:0.15.2
docker.io/ollama/ollama:0.15.2

For Linux and MacOS, to install the necessary Python packages, execute the following command:

$ pip install python-dotenv ollama pillow pydantic

Note that by default, Docker on MacOS is ONLY configured to use up to 8GB of RAM !!!

The following are the steps to adjust the docker resource usage configuration on MacOS:

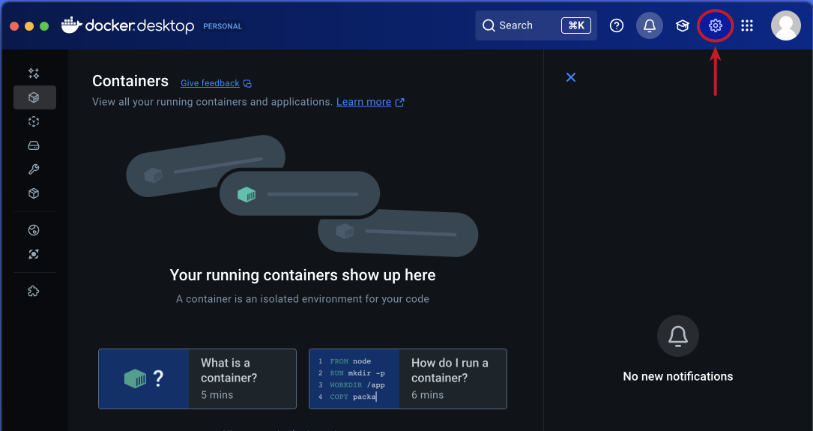

Open the Docker Desktop on MacOS and click on the Settings gear icon as shown in the following illustration:

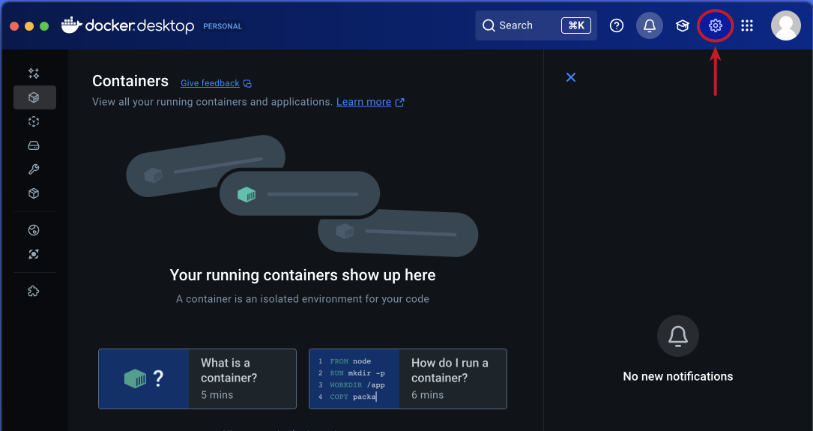

Click on the Resources item from the options on the left-hand side as shown in the following illustration:

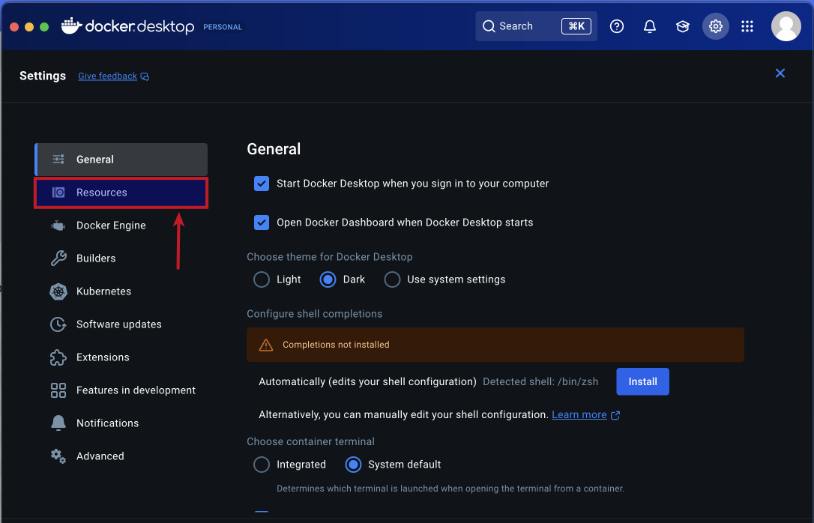

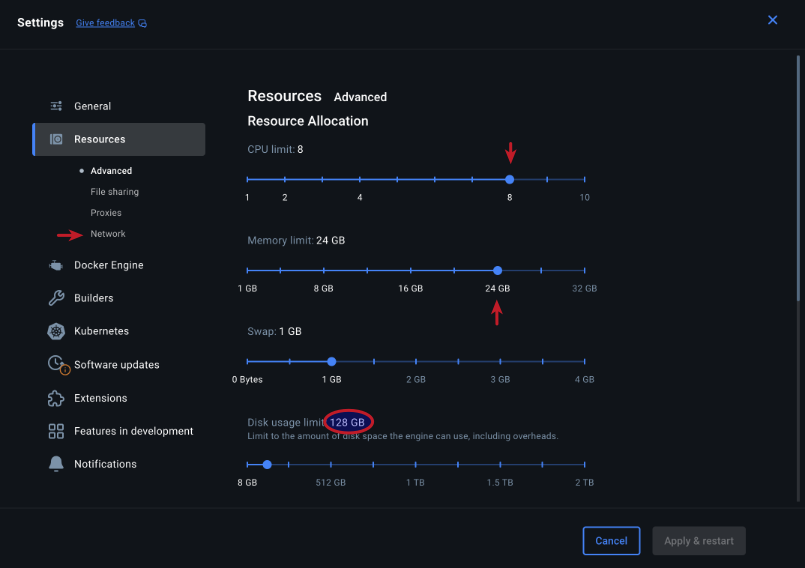

Choose the CPU, Memory, and Disk Usage limits and then click on the Network item under Resources on the left-hand side as shown in the following illustration:

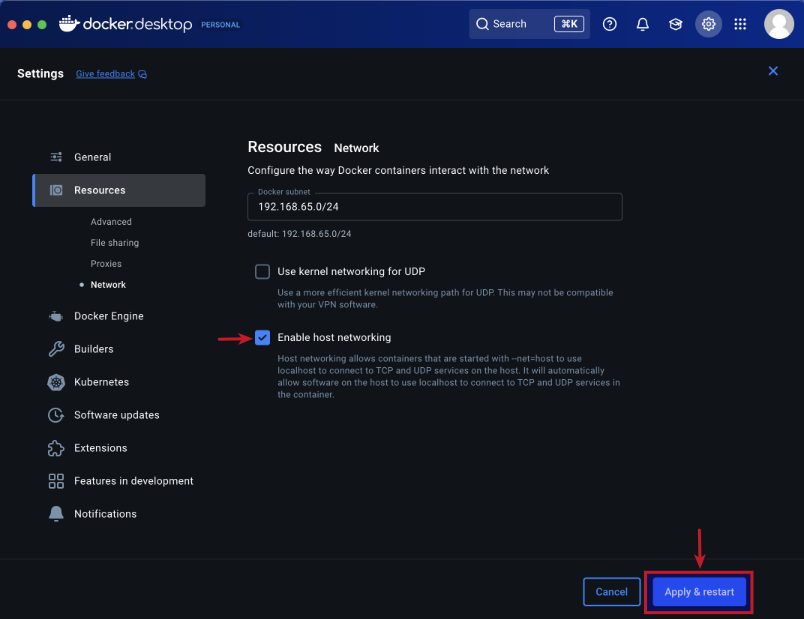

Choose the Enable Host Networking option and then click on the Apply & Restart button as shown in the following illustration:

Finally, reboot the MacOS system for the changes to take effect.

This completes all the system installation and setup for the Ollama hands-on demonstration.

Hands-on with Ollama

In the following sections, we will show the commands for both Linux and MacOS; however, we will ONLY show the output from Linux. Note that all the commands have been tested on both Linux and MacOS.

For Linux, assuming that the IP address of the desktop is 192.168.1.25, start the Ollama platform by executing the following command in a terminal window:

$ docker run --rm --name ollama -p 192.168.1.25:11434:11434 -v $HOME/.ollama:/root/.ollama ollama/ollama:0.15.2

For MacOS, start the Ollama platform by executing the following command in the terminal window:

$ docker run --rm --name ollama -p 11434:11434 -v $HOME/.ollama:/root/.ollama ollama/ollama:0.15.2

The following should be the typical output:

time=2026-01-31T18:24:45.628Z level=INFO source=routes.go:1631 msg="server config" env="map[CUDA_VISIBLE_DEVICES: GGML_VK_VISIBLE_DEVICES: GPU_DEVICE_ORDINAL: HIP_VISIBLE_DEVICES: HSA_OVERRIDE_GFX_VERSION: HTTPS_PROXY: HTTP_PROXY: NO_PROXY: OLLAMA_CONTEXT_LENGTH:4096 OLLAMA_DEBUG:INFO OLLAMA_FLASH_ATTENTION:false OLLAMA_GPU_OVERHEAD:0 OLLAMA_HOST:http://0.0.0.0:11434 OLLAMA_KEEP_ALIVE:5m0s OLLAMA_KV_CACHE_TYPE: OLLAMA_LLM_LIBRARY: OLLAMA_LOAD_TIMEOUT:5m0s OLLAMA_MAX_LOADED_MODELS:0 OLLAMA_MAX_QUEUE:512 OLLAMA_MODELS:/root/.ollama/models OLLAMA_MULTIUSER_CACHE:false OLLAMA_NEW_ENGINE:false OLLAMA_NOHISTORY:false OLLAMA_NOPRUNE:false OLLAMA_NUM_PARALLEL:1 OLLAMA_ORIGINS:[http://localhost https://localhost http://localhost:* https://localhost:* http://127.0.0.1 https://127.0.0.1 http://127.0.0.1:* https://127.0.0.1:* http://0.0.0.0 https://0.0.0.0 http://0.0.0.0:* https://0.0.0.0:* app://* file://* tauri://* vscode-webview://* vscode-file://*] OLLAMA_REMOTES:[ollama.com] OLLAMA_SCHED_SPREAD:false OLLAMA_VULKAN:false ROCR_VISIBLE_DEVICES: http_proxy: https_proxy: no_proxy:]"
time=2026-01-31T18:24:45.634Z level=INFO source=images.go:473 msg="total blobs: 43"
time=2026-01-31T18:24:45.635Z level=INFO source=images.go:480 msg="total unused blobs removed: 0"
time=2026-01-31T18:24:45.635Z level=INFO source=routes.go:1684 msg="Listening on [::]:11434 (version 0.15.2)"
time=2026-01-31T18:24:45.635Z level=INFO source=runner.go:67 msg="discovering available GPUs..."
time=2026-01-31T18:24:45.635Z level=INFO source=server.go:429 msg="starting runner" cmd="/usr/bin/ollama runner --ollama-engine --port 42727"
time=2026-01-31T18:24:45.652Z level=INFO source=server.go:429 msg="starting runner" cmd="/usr/bin/ollama runner --ollama-engine --port 35017"
time=2026-01-31T18:24:45.669Z level=INFO source=runner.go:106 msg="experimental Vulkan support disabled. To enable, set OLLAMA_VULKAN=1"
time=2026-01-31T18:24:45.669Z level=INFO source=types.go:60 msg="inference compute" id=cpu library=cpu compute="" name=cpu description=cpu libdirs=ollama driver="" pci_id="" type="" total="62.7 GiB" available="62.7 GiB"
time=2026-01-31T18:24:45.669Z level=INFO source=routes.go:1725 msg="entering low vram mode" "total vram"="0 B" threshold="20.0 GiB"

If the Linux desktop has an Nvidia GPU with a decent amount of VRAM (at least 16 GB) that has been enabled for use with Docker (see instructions), then execute the following command instead to start Ollama:

$ docker run --rm --name ollama --gpus=all -p 192.168.1.25:11434:11434 -v $HOME/.ollama:/root/.ollama ollama/ollama:0.15.2

On MacOS, there is currently NO SUPPORT for the Apple Silicon GPU from within Docker, and the above command WILL NOT work !!!

For the hands-on demonstration, we will download and use four different pre-trained LLM models: the OpenAI GPT-OSS 20B, the Google Gemma-3 4B, the IBM Granite 4 Micro, and the Alibaba Qwen 3 4B.

Open a new terminal window (referred to as T-1) and execute the following docker command to download the Alibaba Qwen 3 4B LLM model:

$ docker exec -it ollama ollama run qwen3:4b

The following should be the typical output:

pulling manifest
pulling 163553aea1b1: 100% |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||| 2.6 GB
pulling eb4402837c78: 100% |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||| 1.5 KB
pulling d18a5cc71b84: 100% |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||| 11 KB
pulling cff3f395ef37: 100% |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||| 120 B
pulling 5efd52d6d9f2: 100% |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||| 487 B
verifying sha256 digest
writing manifest
success

In terminal window T-1, to exit the interactive session, enter the following command:

>>> /bye

Next, in terminal window T-1, execute the following docker command to download the IBM Granite 4 Micro LLM model:

$ docker exec -it ollama ollama run granite4:micro

The following should be the typical output:

pulling manifest
pulling 6c02683809a8: 100% |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||| 2.1 GB
pulling 0f6ec9740c76: 100% |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||| 7.1 KB
pulling cfc7749b96f6: 100% |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||| 11 KB
pulling ba32b08db168: 100% |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||| 417 B
verifying sha256 digest
writing manifest
success

In terminal window T-1, to exit the interactive session, enter the following command:

>>> /bye

Once again, in terminal window T-1, execute the following docker command to download the Google Gemma-3 4B LLM model:

$ docker exec -it ollama ollama run gemma3:4b

The following should be the typical output:

pulling manifest
pulling ac71e9e32c0b... 100% |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||| 1.5 GB
pulling 3da071a01bbe... 100% |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||| 6.6 KB
pulling 4a99a6dd617d... 100% |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||| 11 KB
pulling f9ed27df66e9... 100% |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||| 417 B
verifying sha256 digest
writing manifest
success

In terminal window T-1, to exit the interactive session, enter the following command:

>>> /bye

One last time, in terminal window T-1, execute the following docker command to download the OpenAI GPT-OSS 20B LLM model:

$ docker exec -it ollama ollama run gpt-oss:20b

The following should be the typical output:

pulling manifest
pulling b112e727c6f1: 100% |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||| 13 GB
pulling 51468a0fd901: 100% |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||| 7.4 KB
pulling f60356777647: 100% |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||| 11 KB
pulling d8ba2f9a17b3: 100% |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||| 18 B
pulling 8d6fddaf04b2: 100% |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||| 489 B
verifying sha256 digest
writing manifest
success

Open another new terminal window (referred to as T-2) and execute the following docker command to list all the downloaded LLM model(s):

$ docker exec -it ollama ollama list

The following should be the typical output:

NAME              ID              SIZE      MODIFIED
granite4:micro    89962fcc7523    2.1 GB    3 minutes ago
gpt-oss:20b       f2b8351c629c    13 GB     35 seconds ago
qwen3:4b          a383baf4993b    2.6 GB    4 minutes ago
gemma3:4b         c0494fe00251    3.3 GB    2 minutes ago

In the terminal window T-2, execute the following docker command to list the running LLM model:

$ docker exec -it ollama ollama ps

The following should be the typical output:

NAME           ID              SIZE     PROCESSOR    CONTEXT    UNTIL
gpt-oss:20b    f2b8351c629c    14 GB    100% GPU     4096       4 minutes from now

As is evident from the Output.8 above, the OpenAI GPT-OSS 20B LLM model is fully loaded and running on the GPU.
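The PROCESSOR column reflects how much of the loaded model sits in GPU memory. As a rough sketch, assuming Ollama's `GET /api/ps` endpoint reports a `size` and a `size_vram` value (both in bytes) for each running model, the label can be approximated as below. The helper `processor_split` is hypothetical, and the exact rounding and wording used by the `ollama ps` CLI may differ.

```python
def processor_split(size: int, size_vram: int) -> str:
    """Approximate the PROCESSOR label shown by `ollama ps`.

    Assumes `size` is the total bytes of the loaded model and
    `size_vram` is the portion resident in GPU memory.
    """
    if size <= 0:
        raise ValueError("size must be positive")
    if size_vram <= 0:
        return "100% CPU"
    if size_vram >= size:
        return "100% GPU"
    gpu_pct = round(100 * size_vram / size)
    cpu_pct = 100 - gpu_pct
    return f"{cpu_pct}%/{gpu_pct}% CPU/GPU"


# Hypothetical numbers: a 14 GB model, fully and then partially offloaded
print(processor_split(14_000_000_000, 14_000_000_000))  # 100% GPU
print(processor_split(14_000_000_000, 10_500_000_000))  # 25%/75% CPU/GPU
```

A partial split like the second case is what one would expect when the model does not fit entirely in VRAM, in which case throughput typically drops noticeably.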

In the terminal window T-2, execute the following docker command to display information about the specific LLM model:

$ docker exec -it ollama ollama show gpt-oss:20b

The following should be the typical output:

  Model
    architecture        gptoss
    parameters          20.9B
    context length      131072
    embedding length    2880
    quantization        MXFP4

  Capabilities
    completion
    tools
    thinking

  Parameters
    temperature    1

  License
    Apache License
    Version 2.0, January 2004
    ...

To test the just downloaded OpenAI GPT-OSS 20B LLM model, execute the following user prompt in the terminal window T-1:

>>> describe a gpu in less than 50 words in json format

The following should be the typical output:

```json
{
  "description": "A GPU is a specialized processor that accelerates graphics and parallel computations, enabling real-time rendering, AI inference, and high-performance computing. It features thousands of cores, high memory bandwidth, and programmable shaders."
}
```

On MacOS, the OpenAI GPT-OSS 20B LLM model is a little bit sluggish, but works - 11/08/2025 !!!

In terminal window T-1, to exit the interactive session, enter the following command:

>>> /bye

Now, we will shift gears to test the local API endpoint.

For Linux, open a new terminal window and execute the following command to list all the LLM models that are hosted in the running Ollama platform:

$ curl -s http://192.168.1.25:11434/api/tags | jq

For MacOS, open a new terminal window and execute the following command to list all the LLM models that are hosted in the running Ollama platform:

$ curl -s http://127.0.0.1:11434/api/tags | jq

The following should be the typical output on Linux:

{
  "models": [
    {
      "name": "granite4:micro",
      "model": "granite4:micro",
      "modified_at": "2025-11-08T17:58:56.85174551Z",
      "size": 2099521385,
      "digest": "89962fcc75239ac434cdebceb6b7e0669397f92eaef9c487774b718bc36a3e5f",
      "details": {
        "parent_model": "",
        "format": "gguf",
        "family": "granite",
        "families": [
          "granite"
        ],
        "parameter_size": "3.4B",
        "quantization_level": "Q4_K_M"
      }
    },
    {
      "name": "gpt-oss:20b",
      "model": "gpt-oss:20b",
      "modified_at": "2025-08-05T23:50:42.593911502Z",
      "size": 13780173839,
      "digest": "f2b8351c629c005bd3f0a0e3046f905afcbffede19b648e4bd7c884cdfd63af6",
      "details": {
        "parent_model": "",
        "format": "gguf",
        "family": "gptoss",
        "families": [
          "gptoss"
        ],
        "parameter_size": "20.9B",
        "quantization_level": "MXFP4"
      }
    },
    {
      "name": "qwen3:4b",
      "model": "qwen3:4b",
      "modified_at": "2025-05-01T23:42:43.224705385Z",
      "size": 2620788019,
      "digest": "a383baf4993bed20dd7a61a68583c1066d6f839187b66eda479aa0b238d45378",
      "details": {
        "parent_model": "",
        "format": "gguf",
        "family": "qwen3",
        "families": [
          "qwen3"
        ],
        "parameter_size": "4.0B",
        "quantization_level": "Q4_K_M"
      }
    },
    {
      "name": "gemma3:4b",
      "model": "gemma3:4b",
      "modified_at": "2025-03-14T11:38:45.044730835Z",
      "size": 3338801718,
      "digest": "c0494fe00251c4fc844e6a1801f9cbd26c37441d034af3cb9284402f7e91989d",
      "details": {
        "parent_model": "",
        "format": "gguf",
        "family": "gemma3",
        "families": [
          "gemma3"
        ],
        "parameter_size": "4.3B",
        "quantization_level": "Q4_K_M"
      }
    }
  ]
}

From the Output.11 above, it is evident we have the four LLM models ready for use !
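The same `/api/tags` call can be made from Python with only the standard library, which is handy when `curl` and `jq` are not available. The sketch below is illustrative: the helper names `fetch_tags`, `list_model_names`, and `total_size_gb` are hypothetical, and the field names (`models`, `name`, `size`) are taken from the JSON output above.

```python
import json
import urllib.request


def fetch_tags(base_url: str, timeout: float = 10.0) -> dict:
    """GET {base_url}/api/tags and decode the JSON body."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


def list_model_names(tags: dict) -> list[str]:
    """Pull the model names out of a parsed /api/tags response."""
    return [entry["name"] for entry in tags.get("models", [])]


def total_size_gb(tags: dict) -> float:
    """Sum the on-disk 'size' fields (bytes) and convert to decimal gigabytes."""
    return sum(entry["size"] for entry in tags.get("models", [])) / 1e9


# Offline check with two entries trimmed from the output above
sample = {"models": [
    {"name": "granite4:micro", "size": 2099521385},
    {"name": "gpt-oss:20b", "size": 13780173839},
]}
print(list_model_names(sample))  # ['granite4:micro', 'gpt-oss:20b']
print(round(total_size_gb(sample), 2))  # 15.88

# Against the live server on Linux (not run here):
# print(list_model_names(fetch_tags("http://192.168.1.25:11434")))
```

Decimal gigabytes are used here because they line up with the sizes reported by `ollama list` (for example, 2099521385 bytes shows up as 2.1 GB).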

Moving along to the next task !

For Linux, to send a user prompt to the LLM model for a response, execute the following command:

$ curl -s http://192.168.1.25:11434/api/generate -d '{
  "model": "gpt-oss:20b",
  "prompt": "describe a gpu in less than 50 words",
  "stream": false
}' | jq

For MacOS, to send a user prompt to the LLM model for a response, execute the following command:

$ curl -s http://127.0.0.1:11434/api/generate -d '{
  "model": "gpt-oss:20b",
  "prompt": "describe a gpu in less than 50 words",
  "stream": false
}' | jq

The following should be the typical trimmed output:

{
  "model": "gpt-oss:20b",
  "created_at": "2025-11-08T18:17:56.833116988Z",
  "response": "A GPU is a specialized processor that rapidly performs parallel calculations, powering graphics rendering, gaming visuals, and accelerating AI and scientific computations.",
  "thinking": "The user: \"describe a GPU in less than 50 words\". Likely they want a concise description. We must keep below 50 words. Count words. Let's propose: \"A GPU is a specialized processor that rapidly performs parallel calculations, powering graphics rendering, gaming visuals, and accelerating AI and scientific computations.\" Count words: A(1) GPU(2) is(3) a(4) specialized(5) processor(6) that(7) rapidly(8) performs(9) parallel(10) calculations,(11) powering(12) graphics(13) rendering,(14) gaming(15) visuals,(16) and(17) accelerating(18) AI(19) and(20) scientific(21) computations.(22) So 22 words. Good.",
  "done": true,
  "done_reason": "stop",
  "context": [
    200006,
    17360,
    200008,
    3575,
    553,
    ...[ TRIM ]...
    20837,
    326,
    19950,
    192859,
    13
  ],
  "total_duration": 3527718974,
  "load_duration": 194138693,
  "prompt_eval_count": 76,
  "prompt_eval_duration": 34232452,
  "eval_count": 197,
  "eval_duration": 3224810124
}
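The timing fields at the end of the response are handy for a quick throughput check. Ollama's API documentation describes the `*_duration` values as nanoseconds; assuming that, the short sketch below (with a hypothetical helper `generation_stats`) converts them to seconds and derives tokens per second for both the prompt and the generated output.

```python
NS_PER_SECOND = 1_000_000_000


def generation_stats(response: dict) -> dict:
    """Derive throughput figures from an Ollama /api/generate response.

    Assumes the *_duration fields are expressed in nanoseconds.
    """
    eval_s = response["eval_duration"] / NS_PER_SECOND
    prompt_s = response["prompt_eval_duration"] / NS_PER_SECOND
    return {
        "total_seconds": response["total_duration"] / NS_PER_SECOND,
        "load_seconds": response["load_duration"] / NS_PER_SECOND,
        "prompt_tokens_per_second": response["prompt_eval_count"] / prompt_s,
        "output_tokens_per_second": response["eval_count"] / eval_s,
    }


# Timing fields copied from the trimmed output above
sample = {
    "total_duration": 3527718974,
    "load_duration": 194138693,
    "prompt_eval_count": 76,
    "prompt_eval_duration": 34232452,
    "eval_count": 197,
    "eval_duration": 3224810124,
}
for key, value in generation_stats(sample).items():
    print(f"{key}: {value:.2f}")
```

For the sample above this works out to roughly 61 output tokens per second (197 tokens over about 3.22 seconds). Note that `eval_count` includes the model's "thinking" tokens, not just the short visible response.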

BAM - we have successfully tested the local API endpoints !
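The calls above set `"stream": false`. With streaming enabled (the API's default), `/api/generate` instead returns newline-delimited JSON objects, each carrying a `response` fragment, with the final object marked `"done": true`. A minimal standard-library sketch of consuming such a stream follows. The helper names `join_stream` and `generate_streaming` are hypothetical, and the offline check uses hand-written chunks rather than real server output.

```python
import json
import urllib.request
from typing import Iterable


def join_stream(lines: Iterable[bytes]) -> str:
    """Concatenate the 'response' fragments of a streamed /api/generate reply."""
    parts = []
    for raw in lines:
        raw = raw.strip()
        if not raw:
            continue  # tolerate blank lines between JSON objects
        chunk = json.loads(raw)
        parts.append(chunk.get("response", ""))
        if chunk.get("done"):
            break
    return "".join(parts)


def generate_streaming(base_url: str, model: str, prompt: str) -> str:
    """POST a prompt with streaming enabled and return the joined text."""
    payload = json.dumps({"model": model, "prompt": prompt, "stream": True}).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/api/generate",
        data=payload,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as resp:
        return join_stream(resp)  # the HTTP response iterates line by line


# Offline check with hand-written chunks (not real server output)
fake = [b'{"response": "A GPU ", "done": false}\n',
        b'{"response": "renders.", "done": true}\n']
print(join_stream(fake))  # A GPU renders.

# Against the live server on Linux (not run here):
# print(generate_streaming("http://192.168.1.25:11434", "gpt-oss:20b",
#                          "describe a gpu in less than 50 words"))
```

In an interactive application, one would typically print each fragment as it arrives instead of joining them at the end.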

Now, we will test Ollama using Python code snippets.

Create a file called .env with the following environment variables defined (on MacOS, set OLLAMA_BASE_URL to 'http://127.0.0.1:11434' instead):

LLM_TEMPERATURE=0.2
OLLAMA_BASE_URL='http://192.168.1.25:11434'
OLLAMA_LANG_MODEL='gpt-oss:20b'
OLLAMA_STRUCT_MODEL='qwen3:4b'
OLLAMA_TOOLS_MODEL='granite4:micro'
OLLAMA_VISION_MODEL='gemma3:4b'
TEST_IMAGE='./data/test-image.png'
RECEIPT_IMAGE='./data/test-receipt.jpg'

To load the environment variables and assign them to corresponding Python variables, execute the following code snippet:

from dotenv import load_dotenv, find_dotenv
import os

load_dotenv(find_dotenv())

llm_temperature = float(os.getenv('LLM_TEMPERATURE'))
ollama_base_url = os.getenv('OLLAMA_BASE_URL')
ollama_lang_model = os.getenv('OLLAMA_LANG_MODEL')
ollama_struct_model = os.getenv('OLLAMA_STRUCT_MODEL')
ollama_tools_model = os.getenv('OLLAMA_TOOLS_MODEL')
ollama_vision_model = os.getenv('OLLAMA_VISION_MODEL')
test_image = os.getenv('TEST_IMAGE')
receipt_image = os.getenv('RECEIPT_IMAGE')
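Since `os.getenv` returns `None` for a missing variable, `float(os.getenv('LLM_TEMPERATURE'))` fails with a somewhat cryptic `TypeError` whenever the .env file is not found. A small hypothetical helper, `require_env`, sketched below, fails early with a message that names the missing variable instead.

```python
import os


def require_env(name: str) -> str:
    """Return the value of an environment variable, failing with a clear message."""
    value = os.getenv(name)
    if value is None or value == "":
        raise RuntimeError(f"Missing required environment variable: {name}")
    return value


# Example usage in place of the plain os.getenv calls above:
# llm_temperature = float(require_env('LLM_TEMPERATURE'))
# ollama_base_url = require_env('OLLAMA_BASE_URL')
```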

To initialize an instance of the client class for Ollama running on the host URL, execute the following code snippet:

from ollama import Client

client = Client(host=ollama_base_url)

To list all the LLM models that are hosted in the running Ollama platform, execute the following code snippet:

client.list()

The following should be the typical output:

ListResponse(models=[Model(model='granite4:micro', modified_at=datetime.datetime(2025, 11, 8, 17, 58, 56, 851745, tzinfo=TzInfo(0)), digest='89962fcc75239ac434cdebceb6b7e0669397f92eaef9c487774b718bc36a3e5f', size=2099521385, details=ModelDetails(parent_model='', format='gguf', family='granite', families=['granite'], parameter_size='3.4B', quantization_level='Q4_K_M')), Model(model='gpt-oss:20b', modified_at=datetime.datetime(2025, 8, 5, 23, 50, 42, 593911, tzinfo=TzInfo(0)), digest='f2b8351c629c005bd3f0a0e3046f905afcbffede19b648e4bd7c884cdfd63af6', size=13780173839, details=ModelDetails(parent_model='', format='gguf', family='gptoss', families=['gptoss'], parameter_size='20.9B', quantization_level='MXFP4')), Model(model='qwen3:4b', modified_at=datetime.datetime(2025, 5, 1, 23, 42, 43, 224705, tzinfo=TzInfo(0)), digest='a383baf4993bed20dd7a61a68583c1066d6f839187b66eda479aa0b238d45378', size=2620788019, details=ModelDetails(parent_model='', format='gguf', family='qwen3', families=['qwen3'], parameter_size='4.0B', quantization_level='Q4_K_M')), Model(model='gemma3:4b', modified_at=datetime.datetime(2025, 3, 14, 11, 38, 45, 44730, tzinfo=TzInfo(0)), digest='c0494fe00251c4fc844e6a1801f9cbd26c37441d034af3cb9284402f7e91989d', size=3338801718, details=ModelDetails(parent_model='', format='gguf', family='gemma3', families=['gemma3'], parameter_size='4.3B', quantization_level='Q4_K_M'))])

To get a text response for a user prompt from the OpenAI GPT-OSS 20B LLM model running on the Ollama platform, execute the following code snippet:

result = client.chat(model=ollama_lang_model,
                     options={'temperature': llm_temperature},
                     messages=[{'role': 'user', 'content': 'Describe ollama in less than 50 words'}])
result

The following should be the typical output:

ChatResponse(model='gpt-oss:20b', created_at='2025-11-08T18:35:43.572369722Z', done=True, done_reason='stop', total_duration=9404905650, load_duration=5932317012, prompt_eval_count=76, prompt_eval_duration=100271123, eval_count=194, eval_duration=3232223162, message=Message(role='assistant', content='Ollama is an open‑source framework that lets developers run and manage large language models on local hardware, offering easy installation, model switching, and API integration for on‑device AI applications.', thinking='We need to describe ollama in less than 50 words. Ollama is a platform for running large language models locally. Provide concise description. Let\'s craft: "Ollama is an open-source framework that lets developers run and manage large language models on local hardware, offering easy installation, model switching, and API integration for on-device AI applications." Count words: Ollama(1) is2 an3 open-source4 framework5 that6 lets7 developers8 run9 and10 manage11 large12 language13 models14 on15 local16 hardware,17 offering18 easy19 installation,20 model21 switching,22 and23 API24 integration25 for26 on-device27 AI28 applications. 28 words. Good.', images=None, tool_name=None, tool_calls=None))
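The call above waits for the complete reply. The `ollama` client also accepts `stream=True`, in which case `client.chat` returns an iterator of partial responses. The sketch below, with a hypothetical helper `collect_chat_stream`, accumulates the thinking text and the answer separately; it relies only on the `message.content` and `message.thinking` attributes visible in the output above, and the offline check uses `SimpleNamespace` stand-ins rather than real chunks.

```python
from types import SimpleNamespace
from typing import Iterable


def collect_chat_stream(chunks: Iterable) -> tuple[str, str]:
    """Accumulate (thinking, content) text from streamed chat chunks.

    Each chunk is expected to expose chunk.message.content and, for
    thinking-capable models, chunk.message.thinking (which may be None).
    """
    thinking, content = [], []
    for chunk in chunks:
        message = chunk.message
        if getattr(message, "thinking", None):
            thinking.append(message.thinking)
        if message.content:
            content.append(message.content)
    return "".join(thinking), "".join(content)


# With a live server (not run here):
# stream = client.chat(model=ollama_lang_model,
#                      messages=[{'role': 'user', 'content': 'Describe ollama in less than 50 words'}],
#                      stream=True)
# thinking_text, answer = collect_chat_stream(stream)

# Offline check using stand-in objects shaped like the streamed chunks
fake_chunks = [
    SimpleNamespace(message=SimpleNamespace(thinking="Count words.", content="")),
    SimpleNamespace(message=SimpleNamespace(thinking=None, content="Ollama runs ")),
    SimpleNamespace(message=SimpleNamespace(thinking=None, content="LLMs locally.")),
]
print(collect_chat_stream(fake_chunks))  # ('Count words.', 'Ollama runs LLMs locally.')
```

Keeping the two streams apart mirrors the separate `thinking` and `content` fields of the non-streaming response.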

For the next task, we will attempt to get the LLM model response in a structured form using a Pydantic model class. For that, we will first define the class by executing the following code snippet:

from pydantic import BaseModel

class GpuSpecs(BaseModel):
    name: str
    bus: str
    memory: int
    clock: int
    cores: int

To receive an LLM model response in the desired format for the specific user prompt from the Ollama platform, execute the following code snippet:

result = client.chat(model=ollama_struct_model,
                     options={'temperature': llm_temperature},
                     messages=[{'role': 'user', 'content': 'Extract the GPU specifications for popular GPU Nvidia RTX 4070 Ti'}],
                     format=GpuSpecs.model_json_schema())

To display the results in the structured form, execute the following code snippet:

rtx_4070 = GpuSpecs.model_validate_json(result.message.content)
rtx_4070

The following should be the typical output:

GpuSpecs(name='Nvidia RTX 4070 Ti', bus='PCIe 4.0', memory=16, clock=1750, cores=16640)

Structured Output (via Pydantic) *FAILS* to work with the OpenAI GPT-OSS 20B LLM model at this point in time - 11/08/2025 !!!
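When a model does not honor the `format` schema, one possible fallback is to ask for JSON in the prompt itself (as in the earlier "in json format" test) and parse the reply by hand. Such replies often arrive wrapped in a Markdown json code fence, as the earlier GPT-OSS output did. The sketch below uses a hypothetical helper, `extract_json`, to strip an optional fence before calling `json.loads`. It is a workaround under that assumption, not a replacement for schema-constrained output.

```python
import json
import re

FENCE = "`" * 3  # built this way to avoid a literal fence inside this listing

# Matches an optional fence (with or without a 'json' tag) around the payload
_FENCED = re.compile(re.escape(FENCE) + r"(?:json)?\s*(.*?)\s*" + re.escape(FENCE), re.DOTALL)


def extract_json(reply: str) -> dict:
    """Parse a JSON object from a model reply, tolerating a Markdown code fence."""
    match = _FENCED.search(reply)
    text = match.group(1) if match else reply.strip()
    return json.loads(text)


# Hypothetical reply shaped like the fenced CLI output shown earlier
sample_reply = FENCE + 'json\n{"name": "GPU-X", "memory": 8}\n' + FENCE
print(extract_json(sample_reply))  # {'name': 'GPU-X', 'memory': 8}
```

The resulting dictionary could then be checked with `GpuSpecs.model_validate(...)`, so that a malformed reply still surfaces as a Pydantic validation error instead of silently passing through.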

Moving along to the next task, we will now demonstrate the Optical Character Recognition (OCR) capabilities by processing the image of a Transaction Receipt !!!

Execute the following code snippet to define a function that converts a JPG image to a base64 string, use it to convert the image of the receipt, and send a user prompt to the Ollama platform:

from io import BytesIO
from PIL import Image
import base64

# Re-encode the image as JPEG in memory and return it as a base64 string
def jpg_to_base64(image):
    jpg_buffer = BytesIO()
    with Image.open(image) as pil_image:
        pil_image.save(jpg_buffer, format='JPEG')
    return base64.b64encode(jpg_buffer.getvalue()).decode('utf-8')

result = client.chat(
    model=ollama_vision_model,
    messages=[
        {
            'role': 'user',
            'content': 'Itemize all the transactions from this receipt image in detail',
            'images': [jpg_to_base64(receipt_image)]
        }
    ]
)

print(result['message']['content'])

Executing the above Python code snippet generates the following typical output:

Okay, here's a detailed breakdown of all the transactions listed in the receipt image:

**Darth Vader #1234: Total Transactions - $132.91**

* **Feb 17**
    * Amazon MKTPL*N606Z9FA37om.com/billWA - $9.87
    * Amazon MKTPL*L89WB21L8m3on.com/billWA - $29.99
* **Feb 21**
    * Amazon RETA*C14EN8XC3WMM.AMAZON.COWA - $74.63

**Rey Skywalker #9876: Payments, Credits & Adjustments**

* **Feb 15**
    * TJ MAX*0224LAWRENCVEILLEN - $21.31
* **Feb 15**
    * Wegmans #93PRINCE TONNJ - $17.79
* **Feb 15**
    * Patel Brothers East Windsornj - $77.75
* **Feb 15**
    * TJ MAX*828EAST WINDSORNJ - $90.58
* **Feb 15**
    * Wholefds PRN 1018PRINCE TONNJ - $6.48
* **Feb 15**
    * Trader Joe S #607PRINCE TONNJ - $2.69
* **Feb 16**
    * Shoprite Lawrncvlle S1LAWRENCVEILLEN - $30.16
* **Feb 18**
    * Wegmans #93PRINCE TONNJ - $19.35
* **Feb 19**
    * Halo Farmlawrencveillen - $13.96

**Total: $258.76**

Let me know if you'd like me to analyze any specific transaction further!
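The `jpg_to_base64` function above decodes the receipt with Pillow and re-compresses it as JPEG before encoding, which is unnecessary when the file is already a JPEG. Pillow also refuses to save images with an alpha channel (such as many PNGs) as JPEG. As a minimal alternative, assuming the vision model accepts the original file format as-is, the raw bytes can be base64-encoded directly with the standard library; `file_to_base64` is a hypothetical name.

```python
import base64
from pathlib import Path


def file_to_base64(path: str) -> str:
    """Base64-encode a file's raw bytes, with no image decoding or re-compression."""
    return base64.b64encode(Path(path).read_bytes()).decode("ascii")


# Drop-in for the earlier chat call (not run here):
# 'images': [file_to_base64(receipt_image)]
```

Skipping the re-encode keeps the original JPEG quality, which may matter for small print on receipts.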

Finally, for the last task, we will demonstrate the tool processing capabilities of the Ollama platform.

Execute the following code snippet to create a custom tool for executing shell commands and reference it in the user prompt sent to the Ollama platform:

import subprocess

def execute_command(command: str) -> str:
    """
    tool to execute a given command and output its result

    Args:
        command (str): The command to execute

    Returns:
        str: The output from the command execution
    """
    print(f'Executing the command: {command}')
    try:
        # check=True raises CalledProcessError on a non-zero exit code
        result = subprocess.run(command, shell=True, check=True, text=True, capture_output=True)
        return result.stdout
    except subprocess.CalledProcessError as e:
        print(e)
        return f'Error executing the command - {command}'

result = client.chat(
    model=ollama_tools_model,
    messages=[{'role': 'user', 'content': 'Execute the command "docker --version" if a tool is provided and display the output'}],
    tools=[execute_command]
)

print(result.message.tool_calls)

if result.message.tool_calls:
    for tool in result.message.tool_calls:
        if tool.function.name == 'execute_command':
            print(f'Ready to call Func: {tool.function.name} with Args: {tool.function.arguments}')
            output = execute_command(**tool.function.arguments)
            print(f'Func output: {output}')

Executing the above Python code snippet generates the following typical output:

Ready to call Func: execute_command with Args: {'command': 'docker --version'}

Executing the command: docker --version

Func output: Docker version 28.2.2, build 28.2.2-0ubuntu1~24.04.1
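The snippet above stops after running the tool locally. In a typical tool-calling loop, the tool output is appended to the conversation as a message with the role `tool` and sent back so the model can phrase a final answer. The sketch below assumes that message shape (including the `tool_name` key, which matches the `tool_name` field visible in the earlier `ChatResponse` output) and uses a hypothetical name-to-function registry, `run_tool_calls`, to dispatch the requested calls.

```python
from types import SimpleNamespace
from typing import Callable


def run_tool_calls(tool_calls, registry: dict[str, Callable[..., str]]) -> list[dict]:
    """Execute each requested tool and build 'tool' role messages from the results.

    `tool_calls` is expected to look like result.message.tool_calls above:
    each item exposes .function.name and .function.arguments (a mapping).
    """
    messages = []
    for call in tool_calls or []:
        func = registry.get(call.function.name)
        if func is None:
            output = f"Unknown tool: {call.function.name}"
        else:
            output = func(**call.function.arguments)
        messages.append({"role": "tool", "content": str(output), "tool_name": call.function.name})
    return messages


# With a live server (not run here), the follow-up request might look like:
# messages = [{'role': 'user', 'content': 'Execute the command "docker --version" if a tool is provided and display the output'}]
# result = client.chat(model=ollama_tools_model, messages=messages, tools=[execute_command])
# messages.append(result.message)
# messages.extend(run_tool_calls(result.message.tool_calls, {'execute_command': execute_command}))
# final = client.chat(model=ollama_tools_model, messages=messages)
# print(final.message.content)

# Offline check with a stand-in tool call and a harmless fake tool
fake_call = SimpleNamespace(function=SimpleNamespace(name="echo", arguments={"text": "hi"}))
print(run_tool_calls([fake_call], {"echo": lambda text: text.upper()}))
```

An explicit registry also acts as an allow-list. Only functions that were deliberately registered can be invoked, which matters when one of them runs shell commands.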

With this, we conclude the various demonstrations on using the Ollama platform for running and working with the pre-trained LLM models locally !!!

References